OPT noted this phenomenon on his blog about a year ago:

"Media recently for the sport has put an emphasis on strength development in spite of promoting true “balance” in fitness and the general components of fitness. A sport where now the elite can qualify for the American open weightlifting championships but cannot qualify for a state-level high school cross country meet."

Although I won't seek to prove it in today's analysis, I think there is little doubt that the big lifts tend to get a lot more attention than general metabolic conditioning in CrossFit media. However, the question I will attempt to answer is whether the sport itself has gotten out of balance.

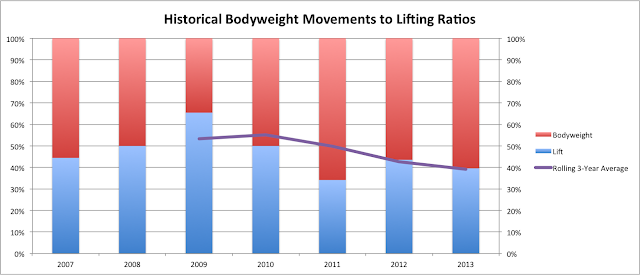

From the programming perspective, I showed in my recent post "History Lesson: An Objective, Analytical Look at the Evolution of the CrossFit Games" that while the metcons have become heavier and heavier over time, the overall balance of lifting and conditioning has not changed drastically in the past 7 years. In addition, there is roughly the same amount of emphasis on Olympic lifting now as there has been throughout Games history. In fact, there has been a shift away from powerlifting-style movements like the deadlift and back squat. Running is, and always has been, the most common movement at the CrossFit Games.

However, there is a legitimate question about the intense focus on Olympic lifting and the lack of focus on running at the Regional level. And there is also no doubt that the CrossFit athletes have been getting more and more proficient at the Olympic lifts, as evidenced by the rising numbers in the 1-rep max events at the Games each year.

So let's ignore the programming for now and focus on the actual strengths and weaknesses of the athletes in our community. Before I do this, I want to revisit the comment from OPT above. I love OPT and have probably watched every CrossFit.com video of his over the past few years, but I think the claim that "the elite can qualify for the American open weightlifting championships but cannot qualify for a state-level high school cross country meet" is a bit misleading on a couple of levels:

- The American Open is not that competitive on a global scale. To qualify in the 85 kg weight class, you need a 266 kg total (http://0205632.netsolhost.com/2013NationalEventsQualifyingTotals.pdf). Yet at the 2012 Olympics, the 16th-place finisher had a total of 315 kg, or 18% higher. On the other hand, to qualify for the highest-level state cross country meet in Ohio (a competitive state where I used to cover sports), you need a time around 17:00. Considering these meets are run on rugged terrain rather than on a track, this time isn't that far behind the Olympic 5,000 meter times (13:52 was 15th place in the 2012 Olympic final). So I think it could be argued that qualifying for those two events is actually relatively comparable in difficulty.

- There are weight classes in Olympic weightlifting, but there are none in CrossFit or cross country. The top CrossFitters are not even in the same stratosphere as the lifters in the +105 kg weight class. On the flip side, among runners above 200 lbs., I have to believe someone like Garrett Fisher would be considered elite.
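To put the two gaps from the first point on the same footing, here is a quick Python check of the arithmetic using only the numbers quoted there. It assumes the Ohio state meet is a 5,000 meter race and ignores terrain entirely, which is exactly why the raw running gap probably overstates how far those runners are from Olympic level.

```python
def mmss_to_seconds(t: str) -> int:
    """Convert an 'mm:ss' string into total seconds."""
    minutes, seconds = t.split(":")
    return int(minutes) * 60 + int(seconds)

# Weightlifting: 85 kg American Open qualifying total vs. 16th place at the 2012 Olympics.
ao_total_kg = 266
olympic_16th_kg = 315
lift_gap = olympic_16th_kg / ao_total_kg - 1  # how much heavier the Olympic total is

# Running: approximate Ohio state-meet qualifying time vs. 15th place in the
# 2012 Olympic 5,000 m final (assumes the state meet is also a 5,000 m race).
state_seconds = mmss_to_seconds("17:00")
olympic_15th_seconds = mmss_to_seconds("13:52")
run_gap = state_seconds / olympic_15th_seconds - 1  # how much slower the state-meet time is

print(f"Olympic total is {lift_gap:.1%} heavier")   # Olympic total is 18.4% heavier
print(f"State-meet time is {run_gap:.1%} slower")  # State-meet time is 22.6% slower
```

Before any allowance for terrain, the two gaps land within about four percentage points of each other.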

But let's look at the numbers throughout our community. To do this analysis, I used the 2013 Open data, which was generously pulled and cleaned for me by Michael Girdley (girdley.com). This dataset has all the numerical information provided by athletes who competed in the Open (it does not include answers to the questions about diet, how long you've done CrossFit, etc.). Based on this self-reported data, I believe we can understand how CrossFit athletes from top to bottom compare to the world's best in a variety of lifts, running events and metcons.

To perform this analysis, I first limited the data to athletes under 40 who completed all five events (approximately 39,000 men and 23,000 women). Then I re-ranked those athletes based on their rank across all five Open events and grouped them by this rank into 20 buckets of 5% each. Within each bucket, I took the average of each self-reported score (Fran, Helen, Grace, Filthy 50, FGB, 400 meter run, 5K run, clean and jerk, snatch, deadlift, back squat, max pull-ups). I converted the timed events to a pace (rounds per second, for instance, or meters per second). Then I pulled in the world record for each and compared the CrossFit community against those world records.
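Here is a rough Python sketch of the bucketing step. The dictionary keys ('age', 'event_ranks') are hypothetical, and re-ranking by the sum of the five event placings is my assumption about how to combine them (it mirrors how the Open itself is scored); the real cleaned dataset may be laid out differently.

```python
from statistics import mean

def to_pace(work, seconds):
    """Turn a timed result into work per second (meters or rounds), so higher is always better."""
    return work / seconds

def bucket_averages(athletes, metric, n_buckets=20):
    """Average one self-reported metric within equal-size buckets of the overall ranking.

    Each athlete is a dict with hypothetical keys: 'age', 'event_ranks'
    (one placing per Open event completed) and the metric itself (None if left blank).
    Returns one average per bucket, best bucket first (None for a bucket with no responses).
    """
    # Keep athletes under 40 who completed all five events.
    field = [a for a in athletes if a["age"] < 40 and len(a["event_ranks"]) == 5]
    # Re-rank the remaining field by the sum of their five event placings (lower is better).
    field.sort(key=lambda a: sum(a["event_ranks"]))
    n = len(field)
    averages = []
    for b in range(n_buckets):
        # Integer slice bounds split the ranked field into n_buckets near-equal groups.
        bucket = field[b * n // n_buckets:(b + 1) * n // n_buckets]
        reported = [a[metric] for a in bucket if a.get(metric) is not None]
        averages.append(mean(reported) if reported else None)
    return averages
```

With the default of 20 buckets, each bucket holds 5% of the field, and blanks are simply skipped inside a bucket rather than treated as zeros.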

The charts below show how the community compares to the world records*. For the lifting events, these are the world records without regard to weight class, since CrossFit does not have weight classes. To reduce clutter, I have grouped all metcons together, all runs together and all lifts together (pull-ups stayed in their own category).

On both charts, we can see that the community is generally closest to the world record when it comes to running events and (not surprisingly) metcons. When compared to the world record in the lifts, it becomes obvious how far behind the CrossFit world still is. Proud of that 200-lb. snatch? Congratulations, you are still at less than half the world record. (Note: I am proud of my 200-lb. snatch, and it took me 5 years to finally get there.)
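For anyone who wants to check a number against the footnoted records, here is one way to express a result as a fraction of the world record. I'm assuming lifts, reps and paces are compared directly and timed results are compared as paces (record time divided by the athlete's time); the 20:00 5K below is a hypothetical example, not a data point from the Open.

```python
LB_PER_KG = 2.20462  # pounds per kilogram

def pct_of_record_score(score, record):
    """'More is better' results (kg lifted, reps, paces): simple fraction of the record."""
    return score / record

def pct_of_record_time(seconds, record_seconds):
    """Timed results: ratio of paces, i.e. record time divided by the athlete's time."""
    return record_seconds / seconds

# A 200-lb. snatch against the men's snatch record of 214 kg.
snatch_kg = 200 / LB_PER_KG
print(f"{pct_of_record_score(snatch_kg, 214):.0%} of the men's snatch record")  # 42% of the men's snatch record

# A hypothetical 20:00 5K against the men's 5,000 m record of 12:37 (757 s).
print(f"{pct_of_record_time(20 * 60, 12 * 60 + 37):.0%} of the 5K record pace")  # 63% of the 5K record pace
```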

But let's also look at the Games athletes in particular and see how they stack up. Here is a table showing each event and how the average Games athlete compares with the world record.

Not shockingly, the Games athletes are close to the world record in the metcons (since the world record generally comes from this field), but again, note that they are much closer to the world records in the 5K run and the 400 meter run than they are in the Olympic lifts. And look at that back squat: not even close! (And before you ask, this compares against the raw world record in the back squat, which appears to be 450 kg as best I could tell.)

It is interesting to note that the elite CrossFit men are closer to the Olympic lifting world records than the elite women, yet they are further from the 5K run record. This could have something to do with the background that many of the athletes had prior to CrossFit, but that's purely a guess at this point.

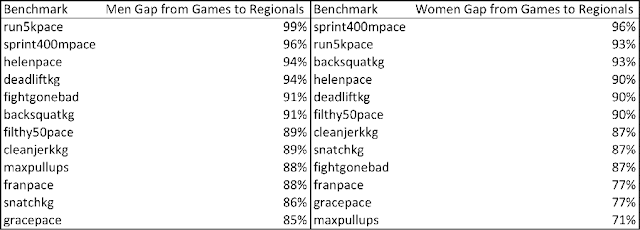

Another way to look at this is to understand where the Games athletes excel furthest beyond the rest of the community, and in particular, where they excel furthest beyond the rest of the Regional field. For both men and women, here is a look at how the top 5% of Open finishers (roughly the Regional field) compare with the Games athletes.

Here I think we start to see something interesting. While the Games athletes do not appear to be any further from the world record in the 5K run than they are in the Olympic lifts, they aren't that much better than the rest of the Regional field in the running events. They also aren't that much better in the powerlifting movements. I think you could attribute this at least partially to the programming at Regionals: we simply aren't testing much running or powerlifting, so the athletes making the Games aren't necessarily that much better than the rest of the field in those areas.

On the flip side, look at where the Games athletes do exceed their peers by a greater amount: the Olympic lifts, the short metcons and pull-ups. It seems that explosive power and conditioning (over a relatively short time frame) are what tend to separate the Games athletes from the rest of the Regional field.

One last way to look at this is to see the gap between the Regional athletes and the median Open athlete**, defined here as athletes finishing in the 45th-55th percentile in the Open among people under 40 who completed all 5 events. These median Open athletes are still generally fit individuals; they just aren't quite at the Regional level.

This table looks a lot like the prior one, meaning that what separates the Regional athletes from the median Open athletes is a lot like what separates the Games athletes from the Regional athletes.

Based on the analysis here, I believe that CrossFit athletes in general aren't bad runners or particularly tremendous lifters. However, the elite CrossFit athletes are significantly better lifters than the rest of the community, and yet they are not drastically better runners than the rest of the community. From this perspective, we do see a little bit of the bias that OPT was writing about. But overall, I don't think the specialization issue is as much of a concern as some might think.

Update 12/20: [I'd like to note that I don't believe that achieving 70% of the world record in the snatch is exactly as challenging as achieving 70% of the world record pace in a 400 meter run. However, the fact that CrossFit Games athletes are so much closer to the world record in the running events than they are in the lifts indicates to me that these athletes should not be considered specialists in the Olympic lifts who simply neglect running. One way to quantify this, which I'm hoping to look into more, is to put things in terms of standard deviations. So far, I have looked at this for men in the snatch, clean & jerk, 5K run and 400 meter run. Using the standard deviation based on the same sample of Open athletes under 40, the Games athletes are approximately 6.0 standard deviations below the world record in the lifts but only 4.3 standard deviations away in the 5K run and 2.0 standard deviations away in the 400 meter run. This isn't a perfect method either, but again, it supports the idea that Games athletes aren't totally specializing in the Olympic lifts while neglecting their running.

However, I do see the same pattern as in the main body of my post when comparing Games athletes to the rest of the CrossFit field. Games athletes are only about 1.1 standard deviations better than the median in the 400 meter run, 0.7 standard deviations better in the 5K run, but approximately 2.5 standard deviations better in the Olympic lifts. So it seems that the same conclusions generally hold when doing the analysis this way.]
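The standard-deviation comparison in the 12/20 update boils down to a single calculation. Here is a minimal sketch, assuming a sample standard deviation and a plain list of reported values for the Open field (both my choices, not necessarily how the original spreadsheet was set up); the function name is hypothetical.

```python
from statistics import stdev

def sd_gap(reference, value, field_values, higher_is_better=True):
    """Number of field standard deviations by which `value` trails `reference`.

    `field_values` is the reported distribution the spread is measured on
    (here: Open athletes under 40). For 'more is better' metrics such as kg or
    pace the gap is (reference - value) / sd; for raw times, where lower is
    better, it is (value - reference) / sd.
    """
    sd = stdev(field_values)  # sample standard deviation (an assumption)
    diff = reference - value if higher_is_better else value - reference
    return diff / sd
```

The distance from the record would be `sd_gap(world_record, games_average, open_values)`, and the edge over the median would be `sd_gap(games_average, median_average, open_values)`. For the runs, you can either pass paces with `higher_is_better=True` or raw times with `higher_is_better=False`.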

Update 12/21: [As a follow-up to the previous update, I looked at where the average Games athlete would fall in the spectrum of all Open athletes in each of the self-reported metrics. This was more difficult than it might seem because of the tremendous selection bias in the data (only about 20% of the men's field reported a 400m time, for instance, but about 50% reported a deadlift max). I tried to account for this by creating a "weighted" distribution, where each 5% bucket counted for the same number of athletes, regardless of how many missing values it had. After doing this, I found that the average male Games athlete is in the top 2% in the clean and jerk and snatch, and in at least the top 6% in all other lifts and metcons. However, in the 400 meter sprint, he is only in the top 15%, and in the 5K run, only in the top 25%.

Note that the selection bias still can't be totally accounted for. It's probably fair to assume that the non-responders in general had worse scores than those who did respond, so maybe the Games athletes are actually even better than they appear here. However, it is definitely striking that the Games athletes are not that far beyond their peers in the runs, particularly the 5K. Still, it doesn't necessarily say they are bad runners, as you could argue that CrossFitters in general are good runners and therefore the Games athletes are still pretty good. It is clear, however, that Games athletes are outdistancing their peers substantially in the Olympic lifts, even though they are generally still well short of elite status.

I think a lot of this has to do with the background of many CrossFitters. Many, many people ran to stay in shape prior to finding CrossFit, but relatively few did Olympic lifting. To really decide whether the sport of CrossFit has gotten too specialized, I think all of the preceding analysis has to be taken together, including how far CrossFitters are from the world records as well as how the Games athletes compare to the rest of the field. I'm not sure there is really a clear-cut answer.]
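The "weighted" distribution from the 12/21 update can be sketched like this. This is my reading of the method, not necessarily the exact calculation: every responder in a bucket gets weight 1 divided by the number of responders in that bucket, so each 5% bucket carries the same total weight. The function name and input layout are hypothetical.

```python
def weighted_share_better(buckets, score, higher_is_better=True):
    """Share of the field that beats `score`, with every rank bucket given equal total weight.

    `buckets` holds one list of reported values per 5% rank bucket (blanks already
    dropped). Each responder in a bucket gets weight 1 / (number of responders in
    that bucket), so a bucket where few athletes reported a number still counts
    as much as one where most did. Buckets with no responders are skipped.
    """
    total = 0.0
    better = 0.0
    for values in buckets:
        if not values:
            continue
        weight = 1.0 / len(values)
        for v in values:
            total += weight
            beats = v > score if higher_is_better else v < score
            if beats:
                better += weight
    return better / total
```

A Games athlete "in the top 2%" corresponds to a share of about 0.02 here. With toy buckets `[[100, 90], [80]]` and a score of 85, the weighted share ahead is 0.5, while a plain count would put two of three values (about 67%) ahead, because the sparsely reported lower bucket is under-counted.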

Update 12/28: [Quick one here. I did the same analysis for the women that I did for the men on 12/21, and I found that the women's Games athletes were slightly more dominant across the board. The average Games athlete would be in the top 3% for all lifts and metcons, the top 9% for the 5K run and the top 15% for the 400 meter sprint. Interestingly, they fared comparatively better than the men in the 5K, but about the same in the 400 meter sprint. I'm not sure I really have a good hypothesis for this at the moment.

It is also worth noting that I looked into the response rates for each metric and found that, for women, the rate was between 7% and 12% for all runs and metcons except Fran, which was 17%. The response rate was between 32% and 38% for all the lifts and 16% for max pull-ups.

For men, the rates were between 13% and 23% for all metcons except Fran, which was 33%. The response rate was between 44% and 51% for all the lifts and 30% for max pull-ups.

This does indicate that there is a selection bias issue that has to be considered, but it's not as if it ONLY applies to the runs. Basically all the metcons and the runs had very low response rates, but the lifts had much higher response rates.]

*Here are the world records I used in this analysis, based on a combination of web research and self-reported PRs from the database:

| Event | Men | Women |
|---|---|---|
| Fran | 2:00 | 2:07 |
| Helen | 6:13 | 7:20 |
| Grace | 1:14 | 1:17 |
| Filthy 50 | 14:05 | 16:13 |
| FGB (reps) | 520 | 460 |
| 400 meters | 0:44 | 0:50 |
| 5,000 meters | 12:37 | 14:11 |
| Clean & Jerk | 263 kg | 190 kg |
| Snatch | 214 kg | 151 kg |
| Deadlift | 461 kg | 264 kg |
| Back squat (raw) | 450 kg | 280 kg |
| Max pull-ups | 106 | 80 |

**There is a significant amount of selection bias in these self-reported numbers, which is why I used the bucketing approach to account for it. In general, the people reporting their numbers for each lift/run/metcon are better at those lifts/runs/metcons than those who leave them blank. Also, for many of the metcons, less experienced athletes may not even have a PR. As an example of this bias, if you take a straight average of the clean and jerk across all women under 40 finishing all 5 events, it's about 134 pounds. But if you group the field by the 5% buckets as I have, take the average in each bucket, then average across all buckets, you get an average of 126 pounds, which I believe is more representative of the "true" average.
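The difference between a straight average and the bucketed average in this footnote comes down to where the averaging happens. Here is a small Python illustration with toy numbers (not the Open data), in which the stronger bucket reports more often; the function name is hypothetical.

```python
from statistics import mean

def straight_vs_bucketed(buckets):
    """Compare a plain average of every reported value with an average of bucket averages.

    `buckets` holds one list of reported values per rank bucket (blanks dropped);
    empty buckets are left out of the bucketed average.
    """
    reported = [v for values in buckets for v in values]
    straight = mean(reported)
    bucketed = mean(mean(values) for values in buckets if values)
    return straight, bucketed

# Toy data: four responders in the stronger bucket, one in the weaker bucket.
toy = [[150, 145, 140, 135], [120]]
print(straight_vs_bucketed(toy))  # (138, 131.25)
```

The straight average comes out to 138, while the average of the two bucket averages is 131.25: the same direction of bias as the 134 vs. 126 pound clean and jerk figures above.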